How to Create a Data Analysis Plan: A Detailed Guide

by Barche Blaise | Aug 12, 2020 | Writing

If a good research question equates to a story, then a roadmap is vital for good storytelling. We advise every student or researcher to write their own data analysis plan before seeking advice. In this blog article, we explore how to create a data analysis plan: its content and structure.

This data analysis plan serves as a roadmap to how data collected will be organised and analysed. It includes the following aspects:

- The research objectives and hypotheses

- The dataset to be used

- The inclusion and exclusion criteria

- The research variables

- The statistical tests for the hypotheses and the software for statistical analysis

- Shell tables

1. Stating research question(s), objectives and hypotheses:

All research objectives or goals must be clearly stated. They must be Specific, Measurable, Attainable, Realistic and Time-bound (SMART). Hypotheses are theories obtained from personal experience or previous literature and they lay a foundation for the statistical methods that will be applied to extrapolate results to the entire population.

2. The dataset:

The dataset that will be used for statistical analysis must be described and its important aspects outlined. These include the owner of the dataset, how to get access to it, how it was checked for quality control, and the program in which it is stored (Excel, Epi Info, SQL, Microsoft Access, etc.).

3. The inclusion and exclusion criteria:

These criteria determine which records in the dataset will be used for data analysis. They also guide the choice of variables included in the main analysis.
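As a minimal sketch of how such criteria translate into a filter on the dataset, the Python snippet below applies two inclusion criteria and one exclusion criterion to a handful of made-up records. The field names (`age`, `consented`, `pregnant`) and the thresholds are assumptions chosen for illustration, not criteria from any particular study.

```python
# Hypothetical records; field names and thresholds are illustrative assumptions.
records = [
    {"id": 1, "age": 34, "consented": True, "pregnant": False},
    {"id": 2, "age": 16, "consented": True, "pregnant": False},
    {"id": 3, "age": 52, "consented": False, "pregnant": False},
    {"id": 4, "age": 29, "consented": True, "pregnant": True},
    {"id": 5, "age": 61, "consented": True, "pregnant": False},
]


def is_eligible(record):
    """Apply the inclusion criteria first, then the exclusion criterion."""
    included = record["age"] >= 18 and record["consented"]
    excluded = record["pregnant"]
    return included and not excluded


analysis_set = [r for r in records if is_eligible(r)]
eligible_ids = [r["id"] for r in analysis_set]
print(eligible_ids)
```

Writing the criteria as one small function keeps them in a single place, so the plan and the analysis code cannot drift apart.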

4. Variables:

Every variable collected in the study should be clearly stated. They should be presented based on the level of measurement (ordinal/nominal or ratio/interval levels), or the role the variable plays in the study (independent/predictors or dependent/outcome variables). The variable types should also be outlined. The variable type in conjunction with the research hypothesis forms the basis for selecting the appropriate statistical tests for inferential statistics. A good data analysis plan should summarize the variables as demonstrated in Figure 1 below.
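A variable summary of this kind can also be drafted as a small data dictionary before any analysis is run. The sketch below is one possible layout in Python; the variable names are hypothetical, and the grouping of nominal and ordinal levels as "categorical" and interval and ratio levels as "continuous" follows common usage.

```python
from collections import defaultdict

# Hypothetical study variables; names and roles are illustrative assumptions.
variables = [
    {"name": "disease_x", "level": "nominal", "role": "dependent"},
    {"name": "age_years", "level": "ratio", "role": "independent"},
    {"name": "sex", "level": "nominal", "role": "independent"},
    {"name": "exercise_frequency", "level": "ordinal", "role": "independent"},
]


def broad_type(level):
    """Collapse the four measurement levels into two broad variable types."""
    return "categorical" if level in ("nominal", "ordinal") else "continuous"


# Group the variables by the role they play in the study.
by_role = defaultdict(list)
for v in variables:
    by_role[v["role"]].append((v["name"], v["level"], broad_type(v["level"])))

for role, rows in by_role.items():
    print(role)
    for name, level, kind in rows:
        print(f"  {name:<20}{level:<10}{kind}")
```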

5. Statistical software

There are many software packages for data analysis; common examples are SPSS, Epi Info, SAS, STATA and Microsoft Excel. State the version number, year of release and author or manufacturer of the package you use. Beginners tend to try several packages and end up mastering none. It is better to select one and master it, because almost all statistical packages perform equally well for the basic analyses, and for most of the advanced analyses, needed for a student thesis. This is what we recommend to all our students at CRENC before they begin writing their results section.

6. Selecting the appropriate statistical method to test hypotheses

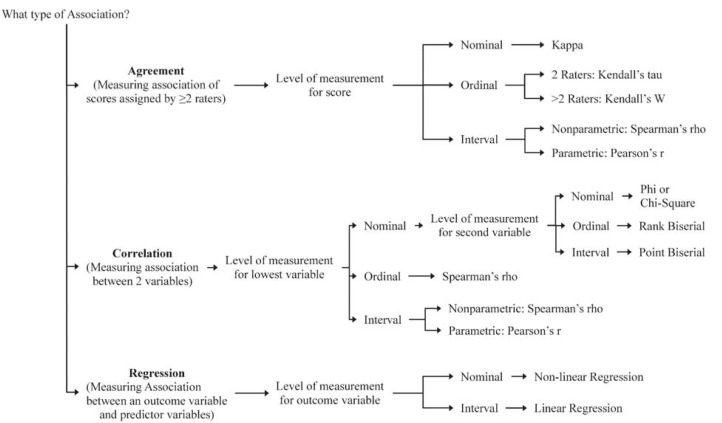

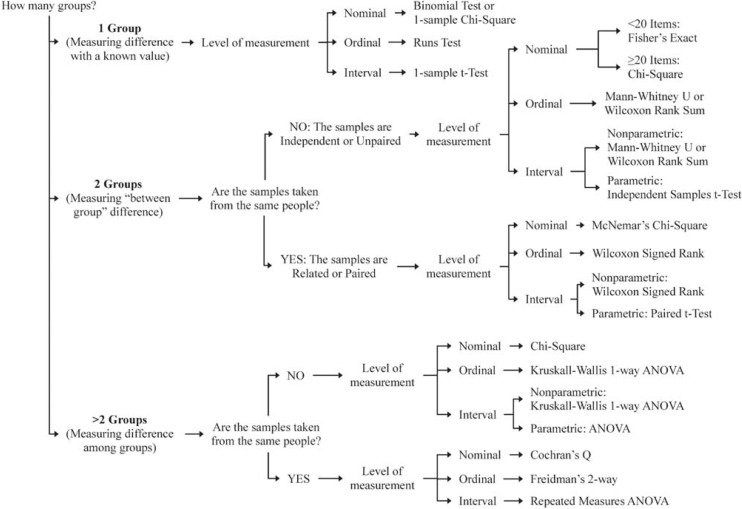

Depending on the research question, hypothesis and type of variable, several statistical methods can be used to answer the research question appropriately. This part of the data analysis plan should state clearly why each statistical method will be used to test the hypotheses. The level of statistical significance, that is, the threshold against which the p-value is compared (often, but not always, 0.05), should also be stated. Figures 2a and 2b present decision trees for some common statistical tests based on the variable type and research question.

A good analysis plan should also clearly describe how missing data will be handled.
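Before deciding how to deal with missing data, it helps to know how much is missing and where. The sketch below counts missing values per variable and identifies complete cases; the records and field names are invented for illustration, and complete-case analysis is shown only as one option among several.

```python
# Hypothetical records; None marks a missing value.
records = [
    {"age": 34, "sex": "F", "weight_kg": 61.0},
    {"age": None, "sex": "M", "weight_kg": 80.5},
    {"age": 45, "sex": None, "weight_kg": None},
    {"age": 52, "sex": "F", "weight_kg": 70.2},
]
fields = ["age", "sex", "weight_kg"]

# Number of missing values for each variable.
missing_counts = {f: sum(r[f] is None for r in records) for f in fields}

# Records with no missing value in any of the listed variables.
complete_cases = [r for r in records if all(r[f] is not None for f in fields)]

print(missing_counts)
print(len(complete_cases), "of", len(records), "records are complete")
```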

7. Creating shell tables

Data analysis involves three levels of analysis of increasing complexity: univariable, bivariable and multivariable. Shell tables should be created in anticipation of the results that will be obtained from these levels of analysis. Read our blog article on how to present tables and figures for more details. Suppose you carry out a study to investigate the prevalence and associated factors of a certain disease “X” in a population; the shell tables can then be laid out as in Tables 1, 2 and 3 below.

Table 1: Example of a shell table from univariate analysis

Table 2: Example of a shell table from bivariate analysis

Table 3: Example of a shell table from multivariate analysis

aOR = adjusted odds ratio
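A shell table is simply a results table with the row and column labels filled in and the result cells left blank. As a rough sketch, the hypothetical helper `shell_table` below prints such a skeleton as plain text; the row labels and column headings are assumptions for a study of disease “X”, not the contents of the tables in this article.

```python
def shell_table(title, columns, row_labels):
    """Return a plain-text table whose result cells are left blank."""
    first_width = max([len(columns[0])] + [len(label) for label in row_labels])
    header = " | ".join([columns[0].ljust(first_width)] + columns[1:])
    lines = [title, header, "-" * len(header)]
    for label in row_labels:
        # One blank cell per result column, as wide as its heading.
        blanks = [" " * len(c) for c in columns[1:]]
        lines.append(" | ".join([label.ljust(first_width)] + blanks))
    return "\n".join(lines)


table = shell_table(
    "Shell table: factors associated with disease X (multivariable analysis)",
    ["Variable", "aOR", "95% CI", "p-value"],
    ["Age (years)", "Sex (female vs male)", "Exercise frequency"],
)
print(table)
```

Drafting the skeleton first makes it clear which statistics each analysis has to produce before any data are analysed.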

Now that you have learned how to create a data analysis plan, these are the takeaway points. It should clearly state the:

- Research question, objectives, and hypotheses

- Dataset to be used

- Variable types and their role

- Statistical software and statistical methods

- Shell tables for univariate, bivariate and multivariate analysis

Further readings

Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study https://www.ncbi.nlm.nih.gov/pmc/articles/PMC4552232/pdf/cjhp-68-311.pdf

Creating an Analysis Plan: https://www.cdc.gov/globalhealth/healthprotection/fetp/training_modules/9/creating-analysis-plan_pw_final_09242013.pdf

Data Analysis Plan: https://www.statisticssolutions.com/dissertation-consulting-services/data-analysis-plan-2/


Dr Barche is a physician and holds a Masters in Public Health. He is a senior fellow at CRENC with interests in Data Science and Data Analysis.


Creating a Data Analysis Plan: What to Consider When Choosing Statistics for a Study

Scot H Simpson

Address correspondence to: Scot H Simpson, Faculty of Pharmacy and Pharmaceutical Sciences, 3126 Dentistry/Pharmacy, University of Alberta, Edmonton AB T6G 2N8, e-mail: [email protected]

There are three kinds of lies: lies, damned lies, and statistics. – Mark Twain 1

INTRODUCTION

Statistics represent an essential part of a study because, regardless of the study design, investigators need to summarize the collected information for interpretation and presentation to others. It is therefore important for us to heed Mr Twain’s concern when creating the data analysis plan. In fact, even before data collection begins, we need to have a clear analysis plan that will guide us from the initial stages of summarizing and describing the data through to testing our hypotheses.

The purpose of this article is to help you create a data analysis plan for a quantitative study. For those interested in conducting qualitative research, previous articles in this Research Primer series have provided information on the design and analysis of such studies. 2 , 3 Information in the current article is divided into 3 main sections: an overview of terms and concepts used in data analysis, a review of common methods used to summarize study data, and a process to help identify relevant statistical tests. My intention here is to introduce the main elements of data analysis and provide a place for you to start when planning this part of your study. Biostatistical experts, textbooks, statistical software packages, and other resources can certainly add more breadth and depth to this topic when you need additional information and advice.

TERMS AND CONCEPTS USED IN DATA ANALYSIS

When analyzing information from a quantitative study, we are often dealing with numbers; therefore, it is important to begin with an understanding of the source of the numbers. Let us start with the term variable, which defines a specific item of information collected in a study. Examples of variables include age, sex or gender, ethnicity, exercise frequency, weight, treatment group, and blood glucose. Each variable will have a group of categories, which are referred to as values, to help describe the characteristic of an individual study participant. For example, the variable “sex” would have values of “male” and “female”.

Although variables can be defined or grouped in various ways, I will focus on 2 methods at this introductory stage. First, variables can be defined according to the level of measurement. The categories in a nominal variable are names, for example, male and female for the variable “sex”; white, Aboriginal, black, Latin American, South Asian, and East Asian for the variable “ethnicity”; and intervention and control for the variable “treatment group”. Nominal variables with only 2 categories are also referred to as dichotomous variables because the study group can be divided into 2 subgroups based on information in the variable. For example, a study sample can be split into 2 groups (patients receiving the intervention and controls) using the dichotomous variable “treatment group”. An ordinal variable implies that the categories can be placed in a meaningful order, as would be the case for exercise frequency (never, sometimes, often, or always). Nominal-level and ordinal-level variables are also referred to as categorical variables, because each category in the variable can be completely separated from the others. The categories for an interval variable can be placed in a meaningful order, with the interval between consecutive categories also having meaning. Age, weight, and blood glucose can be considered as interval variables, but also as ratio variables, because the ratio between values has meaning (e.g., a 15-year-old is half the age of a 30-year-old). Interval-level and ratio-level variables are also referred to as continuous variables because of the underlying continuity among categories.

As we progress through the levels of measurement from nominal to ratio variables, we gather more information about the study participant. The amount of information that a variable provides will become important in the analysis stage, because we lose information when variables are reduced or aggregated—a common practice that is not recommended. 4 For example, if age is reduced from a ratio-level variable (measured in years) to an ordinal variable (categories of < 65 and ≥ 65 years) we lose the ability to make comparisons across the entire age range and introduce error into the data analysis. 4
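A very small sketch can make this loss of information visible. In the Python snippet below (the ages are invented), dichotomizing at 65 years collapses 5 distinct ages into 2 categories, so that a 66-year-old and a 90-year-old become indistinguishable.

```python
# Hypothetical ages in years (ratio-level variable).
ages = [42, 64, 65, 66, 90]

# The same variable reduced to 2 categories.
age_group = ["<65" if age < 65 else ">=65" for age in ages]

print(age_group)
print(len(set(ages)), "distinct values before,", len(set(age_group)), "after")
```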

A second method of defining variables is to consider them as either dependent or independent. As the terms imply, the value of a dependent variable depends on the value of other variables, whereas the value of an independent variable does not rely on other variables. In addition, an investigator can influence the value of an independent variable, such as treatment-group assignment. Independent variables are also referred to as predictors because we can use information from these variables to predict the value of a dependent variable. Building on the group of variables listed in the first paragraph of this section, blood glucose could be considered a dependent variable, because its value may depend on values of the independent variables age, sex, ethnicity, exercise frequency, weight, and treatment group.

Statistics are mathematical formulae that are used to organize and interpret the information that is collected through variables. There are 2 general categories of statistics, descriptive and inferential. Descriptive statistics are used to describe the collected information, such as the range of values, their average, and the most common category. Knowledge gained from descriptive statistics helps investigators learn more about the study sample. Inferential statistics are used to make comparisons and draw conclusions from the study data. Knowledge gained from inferential statistics allows investigators to make inferences and generalize beyond their study sample to other groups.

Before we move on to specific descriptive and inferential statistics, there are 2 more definitions to review. Parametric statistics are generally used when values in an interval-level or ratio-level variable are normally distributed (i.e., the entire group of values has a bell-shaped curve when plotted by frequency). These statistics are used because we can define parameters of the data, such as the centre and width of the normally distributed curve. In contrast, interval-level and ratio-level variables with values that are not normally distributed, as well as nominal-level and ordinal-level variables, are generally analyzed using nonparametric statistics.

METHODS FOR SUMMARIZING STUDY DATA: DESCRIPTIVE STATISTICS

The first step in a data analysis plan is to describe the data collected in the study. This can be done using figures to give a visual presentation of the data and statistics to generate numeric descriptions of the data.

Selection of an appropriate figure to represent a particular set of data depends on the measurement level of the variable. Data for nominal-level and ordinal-level variables may be interpreted using a pie graph or bar graph. Both options allow us to examine the relative number of participants within each category (by reporting the percentages within each category), whereas a bar graph can also be used to examine absolute numbers. For example, we could create a pie graph to illustrate the proportions of men and women in a study sample and a bar graph to illustrate the number of people who report exercising at each level of frequency (never, sometimes, often, or always).

Interval-level and ratio-level variables may also be interpreted using a pie graph or bar graph; however, these types of variables often have too many categories for such graphs to provide meaningful information. Instead, these variables may be better interpreted using a histogram . Unlike a bar graph, which displays the frequency for each distinct category, a histogram displays the frequency within a range of continuous categories. Information from this type of figure allows us to determine whether the data are normally distributed. In addition to pie graphs, bar graphs, and histograms, many other types of figures are available for the visual representation of data. Interested readers can find additional types of figures in the books recommended in the “Further Readings” section.
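The idea behind a histogram, counting values within ranges rather than per distinct value, can be sketched in a few lines of Python. The ages and the 10-year bin width below are assumptions for illustration; a plotting library would normally draw the figure itself.

```python
from collections import Counter

# Hypothetical ages in years.
ages = [23, 27, 31, 34, 35, 38, 41, 42, 44, 47, 52, 58, 63]
bin_width = 10

# Map each age to the lower bound of its bin (e.g., 34 falls in the 30-39 bin).
counts = Counter((age // bin_width) * bin_width for age in ages)

# Print a simple text histogram, one row per bin.
for start in sorted(counts):
    print(f"{start}-{start + bin_width - 1}: {'#' * counts[start]}")
```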

Figures are also useful for visualizing comparisons between variables or between subgroups within a variable (for example, the distribution of blood glucose according to sex). Box plots are useful for summarizing information for a variable that does not follow a normal distribution. The lower and upper limits of the box identify the interquartile range (or 25th and 75th percentiles), while the midline indicates the median value (or 50th percentile). Scatter plots provide information on how the categories for one continuous variable relate to categories in a second variable; they are often helpful in the analysis of correlations.

In addition to using figures to present a visual description of the data, investigators can use statistics to provide a numeric description. Regardless of the measurement level, we can find the mode by identifying the most frequent category within a variable. When summarizing nominal-level and ordinal-level variables, the simplest method is to report the proportion of participants within each category.

The choice of the most appropriate descriptive statistic for interval-level and ratio-level variables will depend on how the values are distributed. If the values are normally distributed, we can summarize the information using the parametric statistics of mean and standard deviation. The mean is the arithmetic average of all values within the variable, and the standard deviation tells us how widely the values are dispersed around the mean. When values of interval-level and ratio-level variables are not normally distributed, or we are summarizing information from an ordinal-level variable, it may be more appropriate to use the nonparametric statistics of median and range. The first step in identifying these descriptive statistics is to arrange study participants according to the variable categories from lowest value to highest value. The range is used to report the lowest and highest values. The median or 50th percentile is located by dividing the number of participants into 2 groups, such that half (50%) of the participants have values above the median and the other half (50%) have values below the median. Similarly, the 25th percentile is the value with 25% of the participants having values below and 75% of the participants having values above, and the 75th percentile is the value with 75% of participants having values below and 25% of participants having values above. Together, the 25th and 75th percentiles define the interquartile range .
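These descriptive statistics are available in Python's standard `statistics` module. The sketch below summarizes a set of invented blood glucose values both ways; note that statistical packages use slightly different conventions for interpolating percentiles, so the quartiles reported by another program may differ a little.

```python
from statistics import mean, median, quantiles, stdev

# Hypothetical blood glucose values (mmol/L), skewed by one high value.
glucose = [4.8, 5.1, 5.4, 5.6, 5.9, 6.3, 7.0, 8.2, 11.5]

# Parametric summary: suitable when values are roughly normally distributed.
avg = mean(glucose)
sd = stdev(glucose)  # sample standard deviation

# Nonparametric summary: median, range and interquartile range.
mid = median(glucose)
value_range = (min(glucose), max(glucose))
q1, q2, q3 = quantiles(glucose, n=4)  # 25th, 50th and 75th percentiles

print(f"mean = {avg:.2f}, SD = {sd:.2f}")
print(f"median = {mid}, range = {value_range}, IQR = {q1:.2f} to {q3:.2f}")
```

In this example the mean is pulled above the median by the single high value, which is one sign that the median and interquartile range may describe these data better.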

PROCESS TO IDENTIFY RELEVANT STATISTICAL TESTS: INFERENTIAL STATISTICS

One caveat about the information provided in this section: selecting the most appropriate inferential statistic for a specific study should be a combination of following these suggestions, seeking advice from experts, and discussing with your co-investigators. My intention here is to give you a place to start a conversation with your colleagues about the options available as you develop your data analysis plan.

There are 3 key questions to consider when selecting an appropriate inferential statistic for a study: What is the research question? What is the study design? and What is the level of measurement? It is important for investigators to carefully consider these questions when developing the study protocol and creating the analysis plan. The figures that accompany these questions show decision trees that will help you to narrow down the list of inferential statistics that would be relevant to a particular study. Appendix 1 provides brief definitions of the inferential statistics named in these figures. Additional information, such as the formulae for various inferential statistics, can be obtained from textbooks, statistical software packages, and biostatisticians.

What Is the Research Question?

The first step in identifying relevant inferential statistics for a study is to consider the type of research question being asked. You can find more details about the different types of research questions in a previous article in this Research Primer series that covered questions and hypotheses. 5 A relational question seeks information about the relationship among variables; in this situation, investigators will be interested in determining whether there is an association (Figure 1). A causal question seeks information about the effect of an intervention on an outcome; in this situation, the investigator will be interested in determining whether there is a difference (Figure 2).

Figure 1. Decision tree to identify inferential statistics for an association.

Figure 2. Decision tree to identify inferential statistics for measuring a difference.

What Is the Study Design?

When considering a question of association, investigators will be interested in measuring the relationship between variables ( Figure 1 ). A study designed to determine whether there is consensus among different raters will be measuring agreement. For example, an investigator may be interested in determining whether 2 raters, using the same assessment tool, arrive at the same score. Correlation analyses examine the strength of a relationship or connection between 2 variables, like age and blood glucose. Regression analyses also examine the strength of a relationship or connection; however, in this type of analysis, one variable is considered an outcome (or dependent variable) and the other variable is considered a predictor (or independent variable). Regression analyses often consider the influence of multiple predictors on an outcome at the same time. For example, an investigator may be interested in examining the association between a treatment and blood glucose, while also considering other factors, like age, sex, ethnicity, exercise frequency, and weight.
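To illustrate a correlation analysis, the sketch below computes a Pearson correlation coefficient and a Spearman rank correlation (its rank-based, nonparametric counterpart) from first principles. The age and blood glucose values are invented, and the simple ranking helper assumes there are no tied values.

```python
from math import sqrt
from statistics import mean


def pearson(x, y):
    """Pearson correlation coefficient for two equal-length lists."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))


def ranks(values):
    """Rank values from 1 (smallest) upward; assumes no ties."""
    order = sorted(values)
    return [order.index(v) + 1 for v in values]


def spearman(x, y):
    """Spearman correlation: the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


# Hypothetical data: glucose rises with age, but not in a straight line.
age = [25, 35, 45, 55, 65]
glucose = [4.9, 5.0, 5.2, 5.9, 8.4]

r_pearson = pearson(age, glucose)
r_spearman = spearman(age, glucose)
print(f"Pearson r = {r_pearson:.2f}, Spearman rho = {r_spearman:.2f}")
```

Because glucose increases with every increase in age, the rank correlation is perfect, whereas the Pearson coefficient is lower because the relationship is not linear.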

When considering a question of difference, investigators must first determine how many groups they will be comparing. In some cases, investigators may be interested in comparing the characteristic of one group with that of an external reference group. For example, is the mean age of study participants similar to the mean age of all people in the target group? If more than one group is involved, then investigators must also determine whether there is an underlying connection between the sets of values (or samples ) to be compared. Samples are considered independent or unpaired when the information is taken from different groups. For example, we could use an unpaired t test to compare the mean age between 2 independent samples, such as the intervention and control groups in a study. Samples are considered related or paired if the information is taken from the same group of people, for example, measurement of blood glucose at the beginning and end of a study. Because blood glucose is measured in the same people at both time points, we could use a paired t test to determine whether there has been a significant change in blood glucose.
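The difference between the two tests can be seen by computing both t statistics on the same numbers. The sketch below uses invented blood glucose values measured at baseline and follow-up in 5 people. The unpaired version uses the pooled-variance (Student) formula and is applied here only for contrast, since these samples are in fact paired. Only the t statistics are computed; converting them to p values requires the t distribution, which a statistical package would supply.

```python
from math import sqrt
from statistics import mean, stdev, variance


def unpaired_t(sample_a, sample_b):
    """Student t statistic for 2 independent samples (pooled variance)."""
    n_a, n_b = len(sample_a), len(sample_b)
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / (n_a + n_b - 2)
    return (mean(sample_a) - mean(sample_b)) / sqrt(pooled_var * (1 / n_a + 1 / n_b))


def paired_t(before, after):
    """t statistic for paired samples, based on within-person differences."""
    diffs = [a - b for a, b in zip(after, before)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical blood glucose (mmol/L) in the same 5 people at 2 time points.
baseline = [7.2, 6.8, 8.1, 7.5, 6.9]
follow_up = [6.9, 6.5, 7.6, 7.3, 6.6]

t_paired = paired_t(baseline, follow_up)
t_unpaired = unpaired_t(follow_up, baseline)
print(f"paired t = {t_paired:.2f}, unpaired t = {t_unpaired:.2f}")
```

The paired statistic is much larger in magnitude because it removes the variation between people and looks only at the change within each person.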

What Is the Level of Measurement?

As described in the first section of this article, variables can be grouped according to the level of measurement (nominal, ordinal, or interval). In most cases, the independent variable in an inferential statistic will be nominal; therefore, investigators need to know the level of measurement for the dependent variable before they can select the relevant inferential statistic. Two exceptions to this consideration are correlation analyses and regression analyses ( Figure 1 ). Because a correlation analysis measures the strength of association between 2 variables, we need to consider the level of measurement for both variables. Regression analyses can consider multiple independent variables, often with a variety of measurement levels. However, for these analyses, investigators still need to consider the level of measurement for the dependent variable.

Selection of inferential statistics to test interval-level variables must include consideration of how the data are distributed. An underlying assumption for parametric tests is that the data approximate a normal distribution. When the data are not normally distributed, information derived from a parametric test may be wrong. 6 When the assumption of normality is violated (for example, when the data are skewed), then investigators should use a nonparametric test. If the data are normally distributed, then investigators can use a parametric test.
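The 3 questions can be combined into a simple lookup. The sketch below is not a reproduction of the decision trees in the figures; it is a hypothetical helper, `suggest_difference_test`, that pairs common parametric tests with their usual nonparametric alternatives for questions of difference. The argument names and category labels are assumptions, and anything the table does not cover is deliberately referred back to an expert.

```python
# (number of groups, paired?, parametric?) -> suggested test for an
# ordinal- or interval-level dependent variable.
RANKED_OR_INTERVAL = {
    ("two", False, True): "independent-samples t test",
    ("two", False, False): "Mann-Whitney U test",
    ("two", True, True): "paired t test",
    ("two", True, False): "Wilcoxon signed rank test",
    ("three_or_more", False, True): "1-way ANOVA",
    ("three_or_more", False, False): "Kruskal-Wallis 1-way ANOVA",
    ("three_or_more", True, True): "repeated-measures ANOVA",
    ("three_or_more", True, False): "Friedman ANOVA",
}

# (number of groups, paired?) -> suggested test for a nominal dependent variable.
NOMINAL = {
    ("two", False): "chi-square test (Fisher exact for small cell counts)",
    ("two", True): "McNemar test",
    ("three_or_more", False): "chi-square test",
    ("three_or_more", True): "Cochran Q test",
}

FALLBACK = "not covered here: consult a biostatistician"


def suggest_difference_test(groups, paired, level, normally_distributed=False):
    """Suggest a starting point for discussion, not a final choice."""
    if level == "nominal":
        return NOMINAL.get((groups, paired), FALLBACK)
    parametric = level in ("interval", "ratio") and normally_distributed
    return RANKED_OR_INTERVAL.get((groups, paired, parametric), FALLBACK)


print(suggest_difference_test("two", True, "interval", normally_distributed=True))
print(suggest_difference_test("three_or_more", False, "ordinal"))
```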

ADDITIONAL CONSIDERATIONS

What Is the Level of Significance?

An inferential statistic is used to calculate a p value, the probability of obtaining results at least as extreme as those observed if chance alone were at work (that is, if there were truly no difference or association). Investigators can then compare this p value against a prespecified level of significance, which is often chosen to be 0.05. This level of significance means accepting a 1 in 20 chance of concluding that a difference or association exists when it does not, which is conventionally considered an acceptable level of error.
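One way to make the idea of a p value concrete is an exact permutation test, which is used here purely as an illustration and is not one of the tests named in this article. The sketch below takes invented blood glucose values from 2 small groups, forms every possible way of splitting the 8 values into groups of 4, and reports the proportion of splits in which the difference in means is at least as large as the one observed.

```python
from itertools import combinations
from statistics import mean

# Hypothetical blood glucose values (mmol/L) in 2 independent groups.
intervention = [5.1, 5.4, 5.0, 5.3]
control = [6.2, 5.9, 6.4, 6.0]


def permutation_p_value(group_a, group_b):
    """Two-sided exact permutation p value for a difference in means."""
    pooled = group_a + group_b
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)
    extreme = 0
    splits = 0
    for indices in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in indices)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        splits += 1
        # Small tolerance guards against floating-point rounding.
        if diff >= observed - 1e-12:
            extreme += 1
    return extreme / splits


p_value = permutation_p_value(intervention, control)
alpha = 0.05  # prespecified level of significance
print(f"p = {p_value:.4f}; significant at {alpha}: {p_value < alpha}")
```

Here only 2 of the 70 possible splits are as extreme as the observed one, so the p value falls below the prespecified level of 0.05.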

What Are the Most Commonly Used Statistics?

In 1983, Emerson and Colditz 7 reported the first review of statistics used in original research articles published in the New England Journal of Medicine. This review of statistics used in the journal was updated in 1989 and 2005, 8 and this type of analysis has been replicated in many other journals. 9–13 Collectively, these reviews have identified 2 important observations. First, the overall sophistication of statistical methodology used and reported in studies has grown over time, with survival analyses and multivariable regression analyses becoming much more common. The second observation is that, despite this trend, 1 in 4 articles describe no statistical methods or report only simple descriptive statistics. When inferential statistics are used, the most common are t tests, contingency table tests (for example, χ² test and Fisher exact test), and simple correlation and regression analyses. This information is important for educators, investigators, reviewers, and readers because it suggests that a good foundational knowledge of descriptive statistics and common inferential statistics will enable us to correctly evaluate the majority of research articles. 11–13 However, to fully take advantage of all research published in high-impact journals, we need to become acquainted with some of the more complex methods, such as multivariable regression analyses. 8,13

What Are Some Additional Resources?

As an investigator and Associate Editor with CJHP , I have often relied on the advice of colleagues to help create my own analysis plans and review the plans of others. Biostatisticians have a wealth of knowledge in the field of statistical analysis and can provide advice on the correct selection, application, and interpretation of these methods. Colleagues who have “been there and done that” with their own data analysis plans are also valuable sources of information. Identify these individuals and consult with them early and often as you develop your analysis plan.

Another important resource to consider when creating your analysis plan is textbooks. Numerous statistical textbooks are available, differing in levels of complexity and scope. The titles listed in the “Further Reading” section are just a few suggestions. I encourage interested readers to look through these and other books to find resources that best fit their needs. However, one crucial book that I highly recommend to anyone wanting to be an investigator or peer reviewer is Lang and Secic’s How to Report Statistics in Medicine (see “Further Reading”). As the title implies, this book covers a wide range of statistics used in medical research and provides numerous examples of how to correctly report the results.

CONCLUSIONS

When it comes to creating an analysis plan for your project, I recommend following the sage advice of Douglas Adams in The Hitchhiker’s Guide to the Galaxy : Don’t panic! 14 Begin with simple methods to summarize and visualize your data, then use the key questions and decision trees provided in this article to identify relevant statistical tests. Information in this article will give you and your co-investigators a place to start discussing the elements necessary for developing an analysis plan. But do not stop there! Use advice from biostatisticians and more experienced colleagues, as well as information in textbooks, to help create your analysis plan and choose the most appropriate statistics for your study. Making careful, informed decisions about the statistics to use in your study should reduce the risk of confirming Mr Twain’s concern.

Appendix 1. Glossary of statistical terms

- ANOVA (analysis of variance):

  - 1-way ANOVA: Uses 1 variable to define the groups for comparing means. This is similar to the Student t test when comparing the means of 2 groups.

  - Kruskal–Wallis 1-way ANOVA: Nonparametric alternative for the 1-way ANOVA. Used to determine the difference in medians between 3 or more groups.

  - n-way ANOVA: Uses 2 or more variables to define groups when comparing means. Also called a “between-subjects factorial ANOVA”.

  - Repeated-measures ANOVA: A method for analyzing whether the means of 3 or more measures from the same group of participants are different.

  - Friedman ANOVA: Nonparametric alternative for the repeated-measures ANOVA. It is often used to compare rankings and preferences that are measured 3 or more times.

- Binomial test: Used to determine whether the observed proportion is significantly different from a known or hypothesized proportion. The variable is dichotomous (nominal-level data with 2 options).

- Biserial correlation: Correlation technique when one of the variables is dichotomous (or measured at the nominal level).

- Chi-square test:

  - Fisher exact: Variation of chi-square that accounts for cell counts < 5.

  - McNemar: Variation of chi-square that tests statistical significance of changes in 2 paired measurements of dichotomous variables.

  - Cochran Q: An extension of the McNemar test that provides a method for testing for differences between 3 or more matched sets of frequencies or proportions. Often used as a measure of heterogeneity in meta-analyses.

- Descriptive statistics: Numeric or graphic summaries (or descriptions) of a variable.

- Inferential statistics: Measures the difference between 2 variables or subgroups of a variable. Allows the investigator to make inferences about another group on the basis of information generated from the study data.

- Kappa: Measures the degree of nonrandom agreement between observers or measurements for the same nominal-level variable.

- Kendall tau: Nonparametric alternative for the Spearman correlation. Used when measuring the relationship between 2 ranked (or ordinal-level data) variables.

- Mann–Whitney U test: Nonparametric alternative for the independent t test. One variable is dichotomous (e.g., group A versus group B) and the other variable is either ordinal or interval.

- Pearson correlation: Parametric test used to determine whether an association exists between 2 variables measured at the interval or ratio level.

- Phi coefficient: Used when both variables in a correlation analysis are dichotomous.

- Runs test: Used to determine whether a series of data occurs from a random process.

- Spearman rank correlation: Nonparametric alternative for the Pearson correlation coefficient. Used when the assumptions for Pearson correlation are violated (e.g., data are not normally distributed) or one of the variables is measured at the ordinal level.

- t test:

  - 1-sample: Used to determine whether the mean of a sample is significantly different from a known or hypothesized value.

  - Independent-samples t test (also referred to as the Student t test): Used when the independent variable is a nominal-level variable that identifies 2 groups and the dependent variable is an interval-level variable.

  - Paired: Used to compare 2 pairs of scores between 2 groups (e.g., baseline and follow-up blood pressure in the intervention and control groups).

- Wilcoxon rank sum test: Nonparametric alternative to the independent t test based solely on the order in which observations from the 2 samples fall. Similar to the Mann–Whitney U test.

- Wilcoxon signed rank test: Nonparametric alternative to the paired t test. The differences between matched pairs are computed and ranked. This test compares the sum of the negative differences and the sum of the positive differences.

FURTHER READING

Lang TA, Secic M. How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. 2nd ed. Philadelphia (PA): American College of Physicians; 2006.

Norman GR, Streiner DL. PDQ statistics. 3rd ed. Hamilton (ON): B.C. Decker; 2003.

Plichta SB, Kelvin E. Munro’s statistical methods for health care research. 6th ed. Philadelphia (PA): Wolters Kluwer Health/Lippincott, Williams & Wilkins; 2013.

This article is the 12th in the CJHP Research Primer Series, an initiative of the CJHP Editorial Board and the CSHP Research Committee. The planned 2-year series is intended to appeal to relatively inexperienced researchers, with the goal of building research capacity among practising pharmacists. The articles, presenting simple but rigorous guidance to encourage and support novice researchers, are being solicited from authors with appropriate expertise.

Previous articles in this series:

Bond CM. The research jigsaw: how to get started. Can J Hosp Pharm. 2014;67(1):28–30.

Tully MP. Research: articulating questions, generating hypotheses, and choosing study designs. Can J Hosp Pharm. 2014;67(1):31–4.

Loewen P. Ethical issues in pharmacy practice research: an introductory guide. Can J Hosp Pharm. 2014;67(2):133–7.

Tsuyuki RT. Designing pharmacy practice research trials. Can J Hosp Pharm. 2014;67(3):226–9.

Bresee LC. An introduction to developing surveys for pharmacy practice research. Can J Hosp Pharm. 2014;67(4):286–91.

Gamble JM. An introduction to the fundamentals of cohort and case–control studies. Can J Hosp Pharm. 2014;67(5):366–72.

Austin Z, Sutton J. Qualitative research: getting started. Can J Hosp Pharm. 2014;67(6):436–40.

Houle S. An introduction to the fundamentals of randomized controlled trials in pharmacy research. Can J Hosp Pharm. 2015;68(1):28–32.

Charrois TL. Systematic reviews: What do you need to know to get started? Can J Hosp Pharm. 2015;68(2):144–8.

Sutton J, Austin Z. Qualitative research: data collection, analysis, and management. Can J Hosp Pharm. 2015;68(3):226–31.

Cadarette SM, Wong L. An introduction to health care administrative data. Can J Hosp Pharm. 2015;68(3):232–7.

Competing interests: None declared.

Further Reading

- Devor J, Peck R. Statistics: the exploration and analysis of data. 7th ed. Boston (MA): Brooks/Cole Cengage Learning; 2012.

- Lang TA, Secic M. How to report statistics in medicine: annotated guidelines for authors, editors, and reviewers. 2nd ed. Philadelphia (PA): American College of Physicians; 2006.

- Mendenhall W, Beaver RJ, Beaver BM. Introduction to probability and statistics. 13th ed. Belmont (CA): Brooks/Cole Cengage Learning; 2009.

- Norman GR, Streiner DL. PDQ statistics. 3rd ed. Hamilton (ON): B.C. Decker; 2003.

- Plichta SB, Kelvin E. Munro’s statistical methods for health care research. 6th ed. Philadelphia (PA): Wolters Kluwer Health/Lippincott, Williams & Wilkins; 2013.

Data analysis write-ups

What should a data-analysis write-up look like?

Writing up the results of a data analysis is not a skill that anyone is born with. It requires practice and, at least in the beginning, a bit of guidance.

Organization

When writing your report, organization will set you free. A good outline is: 1) overview of the problem, 2) your data and modeling approach, 3) the results of your data analysis (plots, numbers, etc), and 4) your substantive conclusions.

1) Overview Describe the problem. What substantive question are you trying to address? This needn’t be long, but it should be clear.

2) Data and model What data did you use to address the question, and how did you do it? When describing your approach, be specific. For example:

- Don’t say, “I ran a regression” when you instead can say, “I fit a linear regression model to predict price that included a house’s size and neighborhood as predictors.”

- Justify important features of your modeling approach. For example: “Neighborhood was included as a categorical predictor in the model because Figure 2 indicated clear differences in price across the neighborhoods.”

Sometimes your Data and Model section will contain plots or tables, and sometimes it won’t. If you feel that a plot helps the reader understand the problem or data set itself, as opposed to your results, then go ahead and include it. A great example here is Tables 1 and 2 in the main paper on the PREDIMED study. These tables help the reader understand some important properties of the data and approach, but not the results of the study itself.

3) Results In your results section, include any figures and tables necessary to make your case. Label them (Figure 1, 2, etc), give them informative captions, and refer to them in the text by their numbered labels where you discuss them. Typical things to include here are pictures of the data, pictures and tables that show the fitted model, and tables of model coefficients and summaries.

4) Conclusion What did you learn from the analysis? What is the answer, if any, to the question you set out to address?

General advice

Make the sections as short or long as they need to be. For example, a conclusions section is often pretty short, while a results section is usually a bit longer.

It’s OK to use the first person to avoid awkward or bizarre sentence constructions, but try to do so sparingly.

Do not include computer code unless explicitly called for. Note: model outputs do not count as computer code. Outputs should be used as evidence in your results section (ideally formatted in a nice way). By code, I mean the sequence of commands you used to process the data and produce the outputs.
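As an illustration of what "formatted in a nice way" might mean for a model output, the sketch below turns a set of coefficients into an aligned, captioned plain-text table. Everything here is an assumption made for the example: the coefficient values are invented, and `format_table` is a hypothetical helper, not part of any particular statistics package.

```python
# Hypothetical regression output: (term, estimate, standard error).
coefficients = [
    ("Intercept", 52310.4, 8120.9),
    ("Size (sq ft)", 84.127, 6.03),
    ("Neighborhood: East", 12850.0, 4310.55),
]


def format_table(rows, caption):
    """Render coefficient rows as an aligned plain-text table with a caption."""
    header = ("Term", "Estimate", "Std. error")
    body = [(term, f"{est:,.1f}", f"{se:,.1f}") for term, est, se in rows]
    widths = [max(len(r[i]) for r in [header] + body) for i in range(3)]
    lines = [caption]
    for r in [header] + body:
        # Left-align the term, right-align the numeric columns.
        lines.append(
            f"{r[0]:<{widths[0]}}  {r[1]:>{widths[1]}}  {r[2]:>{widths[2]}}"
        )
    return "\n".join(lines)


table = format_table(coefficients, "Table 1. Fitted coefficients for the price model.")
print(table)
```

The table, not the code that produced it, is what would go into the results section, with the caption giving it a numbered label that the text can refer to.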

When in doubt, use shorter words and sentences.

A very common way for reports to go wrong is for the writer simply to narrate the thought process he or she followed: “First I did this, but it didn’t work. Then I did something else, and I found A, B, and C. I wasn’t really sure what to make of B, but C was interesting, so I followed up with D and E. Then having done this…” Do not do this. The desire for specificity is admirable, but the overall effect is one of amateurism. Follow the recommended outline above.

Here’s a good example of a write-up for an analysis of a few relatively simple problems. Because the problems are so straightforward, there’s not much of a need for an outline of the kind described above. Nonetheless, the spirit of these guidelines is clearly in evidence. Notice the clear exposition, the labeled figures and tables that are referred to in the text, and the careful integration of visual and numerical evidence into the overall argument. This is one worth emulating.

Writing the Data Analysis Plan

- First Online: 01 January 2010

- A. T. Panter

You and your project statistician have one major goal for your data analysis plan: You need to convince all the reviewers reading your proposal that you would know what to do with your data once your project is funded and your data are in hand. The data analytic plan is a signal to the reviewers about your ability to score, describe, and thoughtfully synthesize a large number of variables into appropriately selected quantitative models once the data are collected. Reviewers respond very well to plans with a clear elucidation of the data analysis steps: in an appropriate order, with an appropriate level of detail and reference to relevant literatures, and with statistical models and methods that map well onto your proposed aims. A successful data analysis plan produces reviews that either include no comments about the data analysis plan or, better yet, compliment it for being comprehensive and logical given your aims. This chapter offers practical advice about developing and writing a compelling, “bullet-proof” data analytic plan for your grant application.

Author information

Authors and affiliations.

L. L. Thurstone Psychometric Laboratory, Department of Psychology, University of North Carolina, Chapel Hill, NC, USA

A. T. Panter

Corresponding author

Correspondence to A. T. Panter.

Editor information

Editors and affiliations.

National Institute of Mental Health, Executive Blvd. 6001, Bethesda, 20892-9641, Maryland, USA

Willo Pequegnat

Ellen Stover

Delafield Place, N.W. 1413, Washington, 20011, District of Columbia, USA

Cheryl Anne Boyce

Copyright information

© 2010 Springer Science+Business Media, LLC

About this chapter

Panter, A.T. (2010). Writing the Data Analysis Plan. In: Pequegnat, W., Stover, E., Boyce, C. (eds) How to Write a Successful Research Grant Application. Springer, Boston, MA. https://doi.org/10.1007/978-1-4419-1454-5_22

DOI: https://doi.org/10.1007/978-1-4419-1454-5_22

Published: 20 August 2010

Publisher Name: Springer, Boston, MA

Print ISBN: 978-1-4419-1453-8

Online ISBN: 978-1-4419-1454-5

eBook Packages: Medicine, Medicine (R0)

A Step-by-Step Guide to the Data Analysis Process

Like any scientific discipline, data analysis follows a rigorous step-by-step process. Each stage requires different skills and know-how. To get meaningful insights, though, it’s important to understand the process as a whole. An underlying framework is invaluable for producing results that stand up to scrutiny.

In this post, we’ll explore the main steps in the data analysis process. This will cover how to define your goal, collect data, and carry out an analysis. Where applicable, we’ll also use examples and highlight a few tools to make the journey easier. When you’re done, you’ll have a much better understanding of the basics. This will help you tweak the process to fit your own needs.

Here are the steps we’ll take you through:

- Defining the question

- Collecting the data

- Cleaning the data

- Analyzing the data

- Sharing your results

- Embracing failure

Ready? Let’s get started with step one.

1. Step one: Defining the question

The first step in any data analysis process is to define your objective. In data analytics jargon, this is sometimes called the ‘problem statement’.

Defining your objective means coming up with a hypothesis and figuring out how to test it. Start by asking: What business problem am I trying to solve? While this might sound straightforward, it can be trickier than it seems. For instance, your organization’s senior management might pose an issue, such as: “Why are we losing customers?” It’s possible, though, that this doesn’t get to the core of the problem. A data analyst’s job is to understand the business and its goals in enough depth that they can frame the problem the right way.

Let’s say you work for a fictional company called TopNotch Learning. TopNotch creates custom training software for its clients. While it is excellent at securing new clients, it has much lower repeat business. As such, your question might not be, “Why are we losing customers?” but, “Which factors are negatively impacting the customer experience?” or better yet: “How can we boost customer retention while minimizing costs?”

Now you’ve defined a problem, you need to determine which sources of data will best help you solve it. This is where your business acumen comes in again. For instance, perhaps you’ve noticed that the sales process for new clients is very slick, but that the production team is inefficient. Knowing this, you could hypothesize that the sales process wins lots of new clients, but the subsequent customer experience is lacking. Could this be why customers don’t come back? Which sources of data will help you answer this question?

Tools to help define your objective

Defining your objective is mostly about soft skills, business knowledge, and lateral thinking. But you’ll also need to keep track of business metrics and key performance indicators (KPIs). Monthly reports can allow you to track problem points in the business. Some KPI dashboards come with a fee, like Databox and DashThis. However, you’ll also find open-source software like Grafana, Freeboard, and Dashbuilder. These are great for producing simple dashboards, both at the beginning and the end of the data analysis process.

2. Step two: Collecting the data

Once you’ve established your objective, you’ll need to create a strategy for collecting and aggregating the appropriate data. A key part of this is determining which data you need. This might be quantitative (numeric) data, e.g. sales figures, or qualitative (descriptive) data, such as customer reviews. All data fit into one of three categories: first-party, second-party, and third-party data. Let’s explore each one.

What is first-party data?

First-party data are data that you, or your company, have directly collected from customers. They might come in the form of transactional tracking data or information from your company’s customer relationship management (CRM) system. Whatever their source, first-party data are usually structured and organized in a clear, defined way. Other sources of first-party data might include customer satisfaction surveys, focus groups, interviews, or direct observation.

What is second-party data?

To enrich your analysis, you might want to secure a secondary data source. Second-party data are the first-party data of other organizations. They might be available directly from the company or through a private marketplace. The main benefit of second-party data is that they are usually structured, and although they will be less relevant than first-party data, they also tend to be quite reliable. Examples of second-party data include website, app, or social media activity, such as online purchase histories, and shipping data.

What is third-party data?

Third-party data is data that has been collected and aggregated from numerous sources by a third-party organization. Often (though not always) third-party data contains a vast amount of unstructured data points (big data). Many organizations collect big data to create industry reports or to conduct market research. The research and advisory firm Gartner is a good real-world example of an organization that collects big data and sells it on to other companies. Open data repositories and government portals are also sources of third-party data.

Tools to help you collect data

Once you’ve devised a data strategy (i.e. you’ve identified which data you need, and how best to go about collecting them) there are many tools you can use to help you. One thing you’ll need, regardless of industry or area of expertise, is a data management platform (DMP). A DMP is a piece of software that allows you to identify and aggregate data from numerous sources, before manipulating them, segmenting them, and so on. There are many DMPs available. Some well-known enterprise DMPs include Salesforce DMP, SAS, and the data integration platform, Xplenty. If you want to play around, you can also try some open-source platforms like Pimcore or D:Swarm.

3. Step three: Cleaning the data

Once you’ve collected your data, the next step is to get it ready for analysis. This means cleaning, or ‘scrubbing’, it, and is crucial in making sure that you’re working with high-quality data. Key data cleaning tasks include:

- Removing major errors, duplicates, and outliers: all of which are inevitable problems when aggregating data from numerous sources.

- Removing unwanted data points: extracting irrelevant observations that have no bearing on your intended analysis.

- Bringing structure to your data: general ‘housekeeping’, i.e. fixing typos or layout issues, which will help you map and manipulate your data more easily.

- Filling in major gaps: as you’re tidying up, you might notice that important data are missing. Once you’ve identified gaps, you can go about filling them.

By some estimates, data analysts spend around 70-90% of their time cleaning their data. This might sound excessive. But focusing on the wrong data points (or analyzing erroneous data) will severely impact your results. It might even send you back to square one, so don’t rush it!
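The cleaning tasks listed above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the records, the field names (`client`, `sector`, `spend`), and the rule that rows from an "internal" sector are unwanted are all hypothetical, and filling gaps with the median is just one simple choice among many. Outlier handling is left out because it depends heavily on the data.

```python
from statistics import median

# Hypothetical raw records aggregated from two sources.
raw = [
    {"client": " Acme Ltd ", "sector": "retail", "spend": 1200.0},
    {"client": "acme ltd", "sector": "Retail", "spend": 1200.0},     # duplicate
    {"client": "Birch Co", "sector": "finance", "spend": None},      # gap
    {"client": "Cedar Inc", "sector": "finance", "spend": 900.0},
    {"client": "Test Account", "sector": "internal", "spend": 0.0},  # unwanted
]


def clean(records):
    """Normalise text, drop unwanted rows and duplicates, fill missing spend."""
    seen, cleaned = set(), []
    for rec in records:
        row = {
            # Collapse stray whitespace and standardise capitalisation.
            "client": " ".join(rec["client"].split()).title(),
            "sector": rec["sector"].strip().lower(),
            "spend": rec["spend"],
        }
        if row["sector"] == "internal":  # irrelevant to the analysis
            continue
        key = (row["client"], row["sector"])
        if key in seen:  # duplicate once the text has been normalised
            continue
        seen.add(key)
        cleaned.append(row)
    # Fill gaps with the median of the observed values (one simple choice).
    fill = median(r["spend"] for r in cleaned if r["spend"] is not None)
    for r in cleaned:
        if r["spend"] is None:
            r["spend"] = fill
    return cleaned


cleaned = clean(raw)
for row in cleaned:
    print(row)
```

Note that the duplicate only becomes detectable after the text is normalised, which is why the housekeeping step runs before the duplicate check.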

Carrying out an exploratory analysis

Another thing many data analysts do (alongside cleaning data) is to carry out an exploratory analysis. This helps identify initial trends and characteristics, and can even refine your hypothesis. Let’s use our fictional learning company as an example again. Carrying out an exploratory analysis, perhaps you notice a correlation between how much TopNotch Learning’s clients pay and how quickly they move on to new suppliers. This might suggest that a low-quality customer experience (the assumption in your initial hypothesis) is actually less of an issue than cost. You might, therefore, take this into account.
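A quick exploratory check of this kind could look like the following sketch, which computes a Pearson correlation coefficient by hand. The fee and retention figures are invented for the TopNotch Learning example, and `pearson_r` is a hypothetical helper name. With real data, a correlation this strong would be unusual, and it would not by itself show that price causes clients to leave.

```python
from math import sqrt


def pearson_r(x, y):
    """Pearson correlation coefficient for two equal-length numeric lists."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / sqrt(var_x * var_y)


# Hypothetical data: annual fee (thousand USD) and months until the client left.
annual_fee = [10, 20, 30, 40, 50]
months_retained = [30, 26, 20, 15, 9]
r = pearson_r(annual_fee, months_retained)
print(round(r, 3))
```

A value close to -1 here would mean that, in this made-up sample, clients who pay more tend to leave sooner.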

Tools to help you clean your data

Cleaning datasets manually, especially large ones, can be daunting. Luckily, there are many tools available to streamline the process. Open-source tools, such as OpenRefine, are excellent for basic data cleaning, as well as high-level exploration. However, such point-and-click tools offer limited functionality for very large datasets. Python libraries (e.g. pandas) and some R packages are better suited for heavy data scrubbing. You will, of course, need to be familiar with the languages. Enterprise tools are also available, for example Data Ladder, which is one of the highest-rated data-matching tools in the industry. There are many more. Why not see which free data cleaning tools you can find to play around with?

4. Step four: Analyzing the data

Finally, you’ve cleaned your data. Now comes the fun bit—analyzing it! The type of data analysis you carry out largely depends on what your goal is. But there are many techniques available. Univariate or bivariate analysis, time-series analysis, and regression analysis are just a few you might have heard of. More important than the different types, though, is how you apply them. This depends on what insights you’re hoping to gain. Broadly speaking, all types of data analysis fit into one of the following four categories.

Descriptive analysis

Descriptive analysis identifies what has already happened. It is a common first step that companies carry out before proceeding with deeper explorations. As an example, let’s refer back to our fictional learning provider once more. TopNotch Learning might use descriptive analytics to analyze course completion rates for their customers. Or they might identify how many users access their products during a particular period. Perhaps they’ll use it to measure sales figures over the last five years. While the company might not draw firm conclusions from any of these insights, summarizing and describing the data will help them to determine how to proceed.
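As a small illustration, a descriptive summary of course completion rates might be produced like this. The rates are hypothetical figures for the fictional TopNotch Learning, and the choice of summary statistics is an assumption rather than a fixed recipe.

```python
from statistics import mean, median

# Hypothetical course completion rates (%) per client.
completion_rates = [62, 75, 81, 58, 90, 75, 69]

summary = {
    "n": len(completion_rates),
    "mean": round(mean(completion_rates), 1),
    "median": median(completion_rates),
    "min": min(completion_rates),
    "max": max(completion_rates),
}
print(summary)
```

Nothing here explains why the rates differ between clients; it only describes what the data look like, which is the point of this stage.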

Diagnostic analysis

Diagnostic analytics focuses on understanding why something has happened. It is literally the diagnosis of a problem, just as a doctor uses a patient’s symptoms to diagnose a disease. Remember TopNotch Learning’s business problem? ‘Which factors are negatively impacting the customer experience?’ A diagnostic analysis would help answer this. For instance, it could help the company draw correlations between the issue (struggling to gain repeat business) and factors that might be causing it (e.g. project costs, speed of delivery, customer sector, etc.). Let’s imagine that, using diagnostic analytics, TopNotch realizes its clients in the retail sector are departing at a faster rate than other clients. This might suggest that they’re losing customers because they lack expertise in this sector. And that’s a useful insight!
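The retail-sector finding in this example could come from a breakdown as simple as the one sketched below. The client records are invented, and `churn_by_sector` is a hypothetical helper; a real diagnostic analysis would also look at other candidate factors such as cost and delivery speed before drawing conclusions.

```python
from collections import defaultdict

# Hypothetical client records: (sector, returned_for_repeat_business).
clients = [
    ("retail", False), ("retail", False), ("retail", True),
    ("finance", True), ("finance", True), ("finance", False),
    ("health", True), ("health", True),
]


def churn_by_sector(records):
    """Share of clients in each sector who did not return."""
    totals, lost = defaultdict(int), defaultdict(int)
    for sector, returned in records:
        totals[sector] += 1
        if not returned:
            lost[sector] += 1
    return {s: lost[s] / totals[s] for s in totals}


rates = churn_by_sector(clients)
# List sectors from highest to lowest churn.
for sector, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{sector}: {rate:.0%}")
```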

Predictive analysis

Predictive analysis allows you to identify future trends based on historical data. In business, predictive analysis is commonly used to forecast future growth, for example. But it doesn’t stop there. Predictive analysis has grown increasingly sophisticated in recent years. The speedy evolution of machine learning allows organizations to make surprisingly accurate forecasts. Take the insurance industry. Insurance providers commonly use past data to predict which customer groups are more likely to get into accidents. As a result, they’ll hike up customer insurance premiums for those groups. Likewise, the retail industry often uses transaction data to predict where future trends lie, or to determine seasonal buying habits to inform their strategies. These are just a few simple examples, but the untapped potential of predictive analysis is pretty compelling.
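The simplest form of forecasting growth from historical data is fitting a straight-line trend and extending it one step ahead. The sketch below does this with ordinary least squares in plain Python. The sales figures are hypothetical and `fit_line` is an illustrative helper; real forecasting models are usually far richer than a straight line.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept for y = intercept + slope * x."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y)) / sum(
        (a - mean_x) ** 2 for a in x
    )
    return slope, mean_y - slope * mean_x


# Hypothetical annual sales (thousand USD) for five past years.
years = [2016, 2017, 2018, 2019, 2020]
sales = [100, 110, 125, 130, 145]
slope, intercept = fit_line(years, sales)
forecast_2021 = intercept + slope * 2021
print(round(forecast_2021, 1))
```

The slope is the estimated yearly growth, and the forecast is only as trustworthy as the assumption that the past trend continues.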

Prescriptive analysis

Prescriptive analysis allows you to make recommendations for the future. This is the final step in the analytics part of the process. It’s also the most complex. This is because it incorporates aspects of all the other analyses we’ve described. A great example of prescriptive analytics is the algorithms that guide Google’s self-driving cars. Every second, these algorithms make countless decisions based on past and present data, ensuring a smooth, safe ride. Prescriptive analytics also helps companies decide on new products or areas of business to invest in.
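At its simplest, a prescriptive step combines predictions with costs and recommends the option with the best expected outcome. The sketch below is a toy version of that idea for the retention question posed earlier. Every number in it is an assumption made for illustration: the candidate interventions, their predicted effects, their costs, and the value assigned to a retained client.

```python
# Assumed value (USD) of keeping one client; hypothetical.
VALUE_PER_RETAINED_CLIENT = 5000

# Hypothetical options: predicted extra clients retained and cost (USD).
options = {
    "dedicated account manager": {"extra_retained": 12, "cost": 40000},
    "faster delivery pipeline": {"extra_retained": 9, "cost": 20000},
    "loyalty discount": {"extra_retained": 6, "cost": 25000},
}


def net_benefit(option):
    """Expected value of retained clients minus the cost of the intervention."""
    return option["extra_retained"] * VALUE_PER_RETAINED_CLIENT - option["cost"]


best = max(options, key=lambda name: net_benefit(options[name]))
print(best, net_benefit(options[best]))
```

The option that retains the most clients is not the one recommended here, because its cost outweighs the extra retention. Weighing predicted effect against cost is what separates a recommendation from a forecast.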

5. Step five: Sharing your results

You’ve finished carrying out your analyses. You have your insights. The final step of the data analytics process is to share these insights with the wider world (or at least with your organization’s stakeholders!) This is more complex than simply sharing the raw results of your work—it involves interpreting the outcomes, and presenting them in a manner that’s digestible for all types of audiences. Since you’ll often present information to decision-makers, it’s very important that the insights you present are 100% clear and unambiguous. For this reason, data analysts commonly use reports, dashboards, and interactive visualizations to support their findings.

How you interpret and present results will often influence the direction of a business. Depending on what you share, your organization might decide to restructure, to launch a high-risk product, or even to close an entire division. That’s why it’s very important to provide all the evidence that you’ve gathered, and not to cherry-pick data. Ensuring that you cover everything in a clear, concise way will prove that your conclusions are scientifically sound and based on the facts. On the flip side, it’s important to highlight any gaps in the data or to flag any insights that might be open to interpretation. Honest communication is the most important part of the process. It will help the business, while also helping you to excel at your job!

Tools for interpreting and sharing your findings

There are tons of data visualization tools available, suited to different experience levels. Popular tools requiring little or no coding skills include Google Charts, Tableau, Datawrapper, and Infogram. If you’re familiar with Python and R, there are also many data visualization libraries and packages available. For instance, check out the Python libraries Plotly, Seaborn, and Matplotlib. Whichever data visualization tools you use, make sure you polish up your presentation skills, too. Remember: Visualization is great, but communication is key!

You can learn more about storytelling with data in this free, hands-on tutorial. We show you how to craft a compelling narrative for a real dataset, resulting in a presentation to share with key stakeholders. This is an excellent insight into what it’s really like to work as a data analyst!

6. Step six: Embrace your failures

The last ‘step’ in the data analytics process is to embrace your failures. The path we’ve described above is more of an iterative process than a one-way street. Data analytics is inherently messy, and the process you follow will be different for every project. For instance, while cleaning data, you might spot patterns that spark a whole new set of questions. This could send you back to step one (to redefine your objective). Equally, an exploratory analysis might highlight a set of data points you’d never considered using before. Or maybe you find that the results of your core analyses are misleading or erroneous. This might be caused by mistakes in the data, or human error earlier in the process.

While these pitfalls can feel like failures, don’t be disheartened if they happen. Data analysis is inherently chaotic, and mistakes occur. What’s important is to hone your ability to spot and rectify errors. If data analytics were straightforward, it might be easier, but it certainly wouldn’t be as interesting. Use the steps we’ve outlined as a framework, stay open-minded, and be creative. If you lose your way, you can refer back to the process to keep yourself on track.

In this post, we’ve covered the main steps of the data analytics process. These core steps can be amended, re-ordered and re-used as you deem fit, but they underpin every data analyst’s work:

- Define the question: What business problem are you trying to solve? Frame it as a question to help you focus on finding a clear answer.

- Collect data: Create a strategy for collecting data. Which data sources are most likely to help you solve your business problem?

- Clean the data: Explore, scrub, tidy, de-dupe, and structure your data as needed. Do whatever you have to! But don’t rush…take your time!

- Analyze the data: Carry out various analyses to obtain insights. Focus on the four types of data analysis: descriptive, diagnostic, predictive, and prescriptive.

- Share your results: How best can you share your insights and recommendations? A combination of visualization tools and communication is key.

- Embrace your mistakes: Mistakes happen. Learn from them. This is what transforms a good data analyst into a great one.

What next? From here, we strongly encourage you to explore the topic on your own. Get creative with the steps in the data analysis process, and see what tools you can find. As long as you stick to the core principles we’ve described, you can create a tailored technique that works for you.


educational research techniques

Research techniques and education.

Developing a Data Analysis Plan

It is extremely common for beginners, and perhaps even experienced researchers, to lose track of what they are trying to achieve when completing a research project. The open nature of research allows for a multitude of equally acceptable ways to complete a project. This can lead to an inability to make decisions and/or stay on course when doing research.

Data Analysis Plan

A data analysis plan includes many features of a research project in it with a particular emphasis on mapping out how research questions will be answered and what is necessary to answer the question. Below is a sample template of the analysis plan.

The majority of this diagram should be familiar to anyone who has ever done research. At the top, you state the problem, which is the overall focus of the paper. Next comes the purpose, which is the overarching goal of the research project.

After the purpose come the research questions. The research questions are questions about the problem that are answerable. People struggle with developing clear and answerable research questions. It is critical that research questions are written in a way that they can be answered and that the questions are clearly derived from the problem. Poor questions mean poor answers, or even no answers.

After the research questions, it is important to know what variables are available for the entire study and specifically what variables can be used to answer each research question. Lastly, you must indicate what analysis or visual you will develop in order to answer your research questions about your problem. This requires you to know how you will answer your research questions.

Below is an example of a completed analysis plan for a simple undergraduate-level research paper.

In the example above, the student wants to understand the perceptions of university students about the cafeteria food quality and their satisfaction with the university. There were four research questions: a demographic descriptive question, a descriptive question about the two main variables, a comparison question, and lastly a relationship question.

The variables available for answering the questions are listed off to the left side. Under that, the student indicates the variables needed to answer each question. For example, the demographic variables of sex, class level, and major are needed to answer the question about the demographic profile.

The last section is the analysis. For the demographic profile, the student found the percentage of the population in each sub group of the demographic variables.
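The structure described above can also be written down as data. The sketch below is one hypothetical way to record the four research questions with the variables and analysis each one needs, followed by the percentage breakdown used for the demographic profile. Only the demographic variables (sex, class level, major) and the percentage analysis come from the example; the remaining variable names, the analyses assigned to the other three questions, and the sample responses are assumptions for illustration.

```python
from collections import Counter

# Hypothetical analysis plan: one entry per research question.
# Analyses for questions 2-4 are assumed; the original lists them only in a figure.
analysis_plan = [
    {"question": "What is the demographic profile of the respondents?",
     "variables": ["sex", "class_level", "major"],
     "analysis": "percentage in each subgroup"},
    {"question": "How do students rate food quality and university satisfaction?",
     "variables": ["food_quality", "satisfaction"],
     "analysis": "means and standard deviations (assumed)"},
    {"question": "Do ratings differ between groups of students?",
     "variables": ["sex", "food_quality", "satisfaction"],
     "analysis": "t-test (assumed)"},
    {"question": "Is food quality related to satisfaction?",
     "variables": ["food_quality", "satisfaction"],
     "analysis": "correlation (assumed)"},
]


def percentage_breakdown(values):
    """Return the percentage of observations falling in each category."""
    counts = Counter(values)
    total = len(values)
    return {category: 100 * count / total for category, count in counts.items()}


# Made-up responses for one demographic variable.
class_levels = ["freshman", "senior", "freshman", "junior",
                "sophomore", "freshman", "senior", "junior"]
profile = percentage_breakdown(class_levels)

for entry in analysis_plan:
    print(f"{entry['question']} -> {entry['analysis']}")
print(profile)
```

Writing the plan down this way makes it easy to check that every question has variables and an analysis assigned before any data are collected.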

A data analysis plan provides an excellent way to determine what needs to be done to complete a study. It also helps researchers clearly understand what they are trying to do and provides a visual for those with whom the researcher wants to communicate about the progress of a study.


How do I write a dissertation data analysis plan?

How do I do dissertation data analysis?

Data Analysis Plan Overview

Dissertation methodologies require a data analysis plan. Your dissertation data analysis plan should clearly state the statistical tests used to examine each research question and the assumptions of those tests, how scores are cleaned and created, and the desired sample size for each test. The selection of statistical tests depends on two factors: (1) how the research questions and hypotheses are phrased and (2) the level of measurement of the variables. For example, if the question examines the impact of variable x on variable y, a regression is called for; if the question seeks associations or relationships, correlation and chi-square tests apply; and if differences are examined, t-tests and ANOVAs are likely the correct tests.

Level of Measurement

The level of measurement is the second factor used in selecting the correct statistical test. If the research question examines the impact of variable X on variable Y, and the outcome variable Y is scale, a linear regression is the correct test (e.g., what is the impact of Income on Savings, with Savings as a scale variable?). If the outcome variable Y is ordinal, an ordinal regression is the correct test (e.g., what is the impact of Income on Savings, with Savings as an ordinal variable with categories $0-$100, $101-$1000, and $1001-$10,000?). If the research question examines relationships, and the X and Y variables are categorical, then chi-square is the appropriate test. The main point is that both the phrasing of the research question and the level of measurement of the variables dictate the selection of the test.
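The selection rules above can be sketched as a small lookup function. This is a simplified illustration rather than a complete decision tree: the function name select_test and its arguments are hypothetical, the split between a t-test (two groups) and ANOVA (more than two groups) is a standard convention added here, and any combination not covered returns None.

```python
def select_test(question_type, outcome_level=None, predictor_level=None, groups=2):
    """Suggest a test from the question phrasing and the level of measurement.

    question_type: "impact", "relationship", or "difference"
    outcome_level / predictor_level: "scale", "ordinal", or "categorical"
    groups: number of groups compared (used only for "difference" questions)
    """
    if question_type == "impact":
        if outcome_level == "scale":
            return "linear regression"
        if outcome_level == "ordinal":
            return "ordinal regression"
    elif question_type == "relationship":
        if outcome_level == "categorical" and predictor_level == "categorical":
            return "chi-square test"
        if outcome_level == "scale" and predictor_level == "scale":
            return "correlation"
    elif question_type == "difference":
        # Assumed convention: two groups -> t-test, more than two -> ANOVA.
        return "t-test" if groups == 2 else "ANOVA"
    return None  # combination not covered by this sketch


# Impact of Income on Savings, with Savings measured in ordered brackets.
print(select_test("impact", outcome_level="ordinal"))
# Relationship between two categorical variables.
print(select_test("relationship", "categorical", "categorical"))
```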

Statistical Assumptions in Data Analysis Plan

Part of the data analysis plan is to document the assumptions of each statistical test. Most assumptions concern normality, homogeneity of variance, and outliers. Some tests have additional assumptions. For example, in a linear regression with several predictors, the variance inflation factor needs to be assessed to determine that the predictors are not too highly correlated.

Composite Scores and Data Cleaning

Data analysis plans should discuss any reverse coding of the variables and the creation of composite or subscale scores. Before composite scores are created, the plan should provide for examining alpha reliability. Data cleaning procedures should also be documented, for example, the removal of outliers and the transformation of variables to meet the normality assumption.
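The sketch below walks through these steps on a tiny made-up dataset: reverse coding a negatively worded item on a 1 to 5 scale, checking Cronbach's alpha, and then averaging the items into a composite score. The item names, responses, and scale range are assumptions for illustration. Alpha is computed with the standard formula k / (k - 1) × (1 - sum of item variances / variance of total scores).

```python
from statistics import mean, variance


def reverse_code(score, scale_min=1, scale_max=5):
    """Flip a score on a bounded scale, e.g. 1 <-> 5 and 2 <-> 4."""
    return scale_max + scale_min - score


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of scores per item,
    with respondents in the same order in every list."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance_sum = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Hypothetical responses from five participants to a three-item scale.
item1 = [4, 5, 3, 2, 4]
item2 = [5, 5, 3, 1, 4]
item3_raw = [2, 1, 3, 4, 2]  # negatively worded item

# Step 1: reverse code so that high scores mean the same thing on every item.
item3 = [reverse_code(score) for score in item3_raw]

# Step 2: check reliability before combining the items.
alpha = cronbach_alpha([item1, item2, item3])

# Step 3: composite score = mean of the items for each participant.
composite = [mean(scores) for scores in zip(item1, item2, item3)]

print(f"Cronbach's alpha: {alpha:.2f}")
print(composite)
```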

Sample Size and Power Analysis

After selecting the appropriate statistical tests, data analysis plans should follow up with a power analysis. The power analysis determines the sample size for a statistical test, given an alpha of .05 and a given effect size (small, medium, or large), at a power of .80 (that is, an 80% chance of detecting differences or relationships if they are in fact present).
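For a sense of how these inputs turn into a sample size, here is a minimal sketch for a two-group comparison of means using the normal approximation n per group = 2 × ((z for alpha + z for power) / d)², where d is Cohen's d. The effect sizes 0.2, 0.5, and 0.8 are Cohen's conventional small, medium, and large values. This is an approximation: dedicated power software that works with the t distribution typically returns slightly larger numbers, and other tests need other formulas.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate n per group for a two-sided, two-sample comparison of means.

    Uses the normal approximation n = 2 * ((z_alpha + z_power) / d) ** 2.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value, two-sided test
    z_power = NormalDist().inv_cdf(power)          # quantile for the desired power
    return ceil(2 * ((z_alpha + z_power) / effect_size) ** 2)


# Cohen's conventional effect sizes.
for label, d in [("small", 0.2), ("medium", 0.5), ("large", 0.8)]:
    print(f"{label} effect (d = {d}): about {sample_size_per_group(d)} per group")
```

The pattern to notice is that halving the expected effect size roughly quadruples the required sample.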

COMMENTS

A Data Analysis Plan (DAP) is about putting thoughts into a plan of action. Research questions are often framed broadly and need to be clarified and funnelled down into testable hypotheses and action steps. The DAP provides an opportunity for input from collaborators and provides a platform for training.

In fact, even before data collection begins, we need to have a clear analysis plan that will guide us from the initial stages of summarizing and describing the data through to testing our hypotheses. The purpose of this article is to help you create a data analysis plan for a quantitative study.

DATA ANALYSIS PLAN. 1. How it fits in. 2. What it shows. 3. Example. 4. Tips and morals. Henry Feldman Clinical Research Center Boston Children’s Hospital. analysis plan: bird’s-eye view. A good analysis plan is the ultimate demonstration that your whole proposal is well formulated. Specific Aims. Hypotheses. Study Design. Data Collection.

When writing your report, organization will set you free. A good outline is: 1) overview of the problem, 2) your data and modeling approach, 3) the results of your data analysis (plots, numbers, etc), and 4) your substantive conclusions. Describe the problem.